A Collection of Hibernate Notes

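In Hibernate 3.6.x:

get returns the actual object instance, or null if no such object exists. With a polymorphic mapping, session.get(Superclass.class, id) and session.get(ConcreteClass.class, id) return exactly the same concrete instance.

load returns a generated proxy (its class name ends with something like _$$_javassist_*). A proxy instance is returned even if no object with the given ID exists. The database is queried only when a non-ID property is accessed for the first time, and an exception (ObjectNotFoundException) is thrown if the row cannot be found. The proxy's superclass is the class passed to session.load(Class.class, id), so with a polymorphic mapping, if you do not specify the concrete type, the proxy cannot be cast to the actual type.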
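To make the get/load difference concrete, here is a plain-JDK sketch of the lazy-proxy idea. It is only an analogy, assuming an in-memory map in place of the database: it uses java.lang.reflect.Proxy over a hypothetical PersonView interface, whereas Hibernate generates a javassist subclass of the entity class. LazyLoadSketch, PersonRow and the selects counter are illustrative names, not Hibernate API.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.Method;
import java.lang.reflect.Proxy;
import java.util.HashMap;
import java.util.Map;

// Hypothetical entity view: JDK proxies need an interface, while Hibernate subclasses the entity class itself.
interface PersonView {
    Long getId();
    String getName();
}

// Hypothetical "row" held by the simulated database.
class PersonRow implements PersonView {
    private final Long id;
    private final String name;

    PersonRow(Long id, String name) { this.id = id; this.name = name; }

    public Long getId() { return id; }
    public String getName() { return name; }
}

class LazyLoadSketch {
    // In-memory stand-in for the PERSON table, keyed by id.
    static final Map<Long, PersonRow> TABLE = new HashMap<>();
    // Number of simulated SELECTs, so that deferred loading is observable.
    static int selects = 0;

    // get-like: queries immediately and returns null when the row does not exist.
    static PersonView get(Long id) {
        selects++;
        return TABLE.get(id);
    }

    // load-like: always returns a proxy; the row is read only on the first non-id call.
    static PersonView load(final Long id) {
        InvocationHandler handler = new InvocationHandler() {
            private PersonRow target; // filled in on first initialisation

            @Override
            public Object invoke(Object proxy, Method method, Object[] args) throws Throwable {
                if (method.getName().equals("getId")) {
                    return id; // answering getId() needs no database access
                }
                if (target == null) {
                    selects++;
                    target = TABLE.get(id);
                    if (target == null) {
                        // Hibernate throws ObjectNotFoundException at this point
                        throw new IllegalStateException("No row with the given identifier exists: " + id);
                    }
                }
                return method.invoke(target, args);
            }
        };
        return (PersonView) Proxy.newProxyInstance(
                PersonView.class.getClassLoader(), new Class<?>[] { PersonView.class }, handler);
    }
}
```

Note that load(99L) succeeds even though row 99 does not exist; only the later getName() call fails, which mirrors the behavior described above.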

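When the FetchType of a @ManyToOne association is set to LAZY, the associated entity may be loaded in a load-like way, i.e. as a proxy (through SessionImplementor.internalLoad), unless the association is also annotated with @NotFound(action = NotFoundAction.IGNORE). The relevant Hibernate code: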

    protected final Object resolveIdentifier(Serializable id, SessionImplementor session) throws HibernateException {
        boolean isProxyUnwrapEnabled = unwrapProxy &&
                session.getFactory()
                        .getEntityPersister( getAssociatedEntityName() )
                        .isInstrumented( session.getEntityMode() );
        // EntityType is the superclass of ManyToOneType; in ManyToOneType, isNullable() == ignoreNotFound,
        // which corresponds to the @NotFound annotation
        Object proxyOrEntity = session.internalLoad(
                getAssociatedEntityName(),
                id,
                eager,
                isNullable() && !isProxyUnwrapEnabled
        );
        if ( proxyOrEntity instanceof HibernateProxy ) {
            ( ( HibernateProxy ) proxyOrEntity ).getHibernateLazyInitializer()
                    .setUnwrap( isProxyUnwrapEnabled );
        }
        return proxyOrEntity;
    }

    public Object internalLoad(String entityName, Serializable id, boolean eager, boolean nullable) throws HibernateException {
        // todo : remove
        LoadEventListener.LoadType type = nullable
                // if NotFoundAction.IGNORE is set, this branch is taken and no proxy is created
                ? LoadEventListener.INTERNAL_LOAD_NULLABLE
                : eager
                        ? LoadEventListener.INTERNAL_LOAD_EAGER // no proxy is created
                        : LoadEventListener.INTERNAL_LOAD_LAZY; // the only branch that creates a proxy
        LoadEvent event = new LoadEvent(id, entityName, true, this);
        fireLoad(event, type);
        if ( !nullable ) {
            UnresolvableObjectException.throwIfNull( event.getResult(), id, entityName );
        }
        return event.getResult();
    }

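Therefore, with a polymorphic @ManyToOne, to make sure the associated object has its exact concrete type, either disable lazy loading, or keep lazy loading enabled and also set NotFoundAction.IGNORE.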
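The branch selection in internalLoad boils down to a small decision table. The sketch below restates it in plain Java so the rule above can be checked at a glance. InternalLoadType and forManyToOne are hypothetical names that only mirror the LoadEventListener constants and the arguments passed by resolveIdentifier; they are not Hibernate API.

```java
// Hypothetical mirror of the load-type choice in SessionImpl.internalLoad (Hibernate 3.6.x), for illustration only.
enum InternalLoadType {
    INTERNAL_LOAD_NULLABLE, // @NotFound(IGNORE): no proxy, a missing row yields null
    INTERNAL_LOAD_EAGER,    // eager fetch: no proxy, a missing row raises an exception
    INTERNAL_LOAD_LAZY;     // lazy fetch: the only branch that may return a proxy

    // Same branch order as the ternary in internalLoad: nullable is checked first.
    static InternalLoadType choose(boolean nullable, boolean eager) {
        if (nullable) {
            return INTERNAL_LOAD_NULLABLE;
        }
        return eager ? INTERNAL_LOAD_EAGER : INTERNAL_LOAD_LAZY;
    }

    // Mirrors resolveIdentifier: nullable = isNullable() && !isProxyUnwrapEnabled.
    static InternalLoadType forManyToOne(boolean ignoreNotFound, boolean proxyUnwrapEnabled, boolean eager) {
        return choose(ignoreNotFound && !proxyUnwrapEnabled, eager);
    }

    // True when the association may be resolved to a proxy of the declared (super)class.
    boolean mayReturnProxy() {
        return this == INTERNAL_LOAD_LAZY;
    }
}
```

Only the combination "lazy and not @NotFound(IGNORE)" can yield a proxy typed as the declared superclass, which is why either switch avoids the wrong-type problem.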

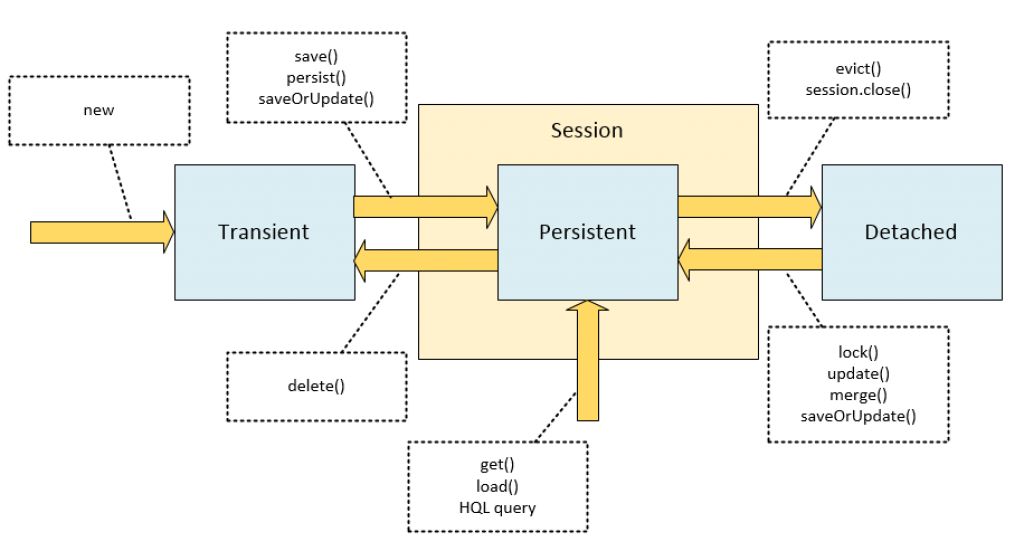

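| State | Description |
| --- | --- |
| New (Transient) | A newly created object that has never been associated with a Hibernate session (persistence context) and is not associated with any row of a database table. To make a transient object persistent, you must explicitly call a persistence API such as persist. |
| Persistent (Managed) | Associated with a row of a database table and managed by the current Hibernate session. Any change to such an object is detected automatically and written to the database during flush. |
| Detached | When the current Hibernate session is closed, previously persistent objects become detached; later updates to them are not tracked and are therefore not written to the database automatically. To reattach a detached object to a Hibernate session, you can call session.update() or session.saveOrUpdate(), or use session.merge() to copy its state onto a managed instance. For entity types with generated IDs, an object whose ID was assigned manually is treated as detached, even if it was just created with new and has never been saved. Calling session.persist() on such an object fails with the error: detached entity passed to persist |
| Removed | The entity object is deleted from the database; the actual deletion happens at flush. JPA allows only managed objects to be removed, but Hibernate also allows detached objects to be deleted with session.delete. |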
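The table can be read as a small state machine. The following is a simplified model, not Hibernate code: EntityState, SessionOp and EntityStateSketch are hypothetical names, UPDATE stands for reattaching via session.update(), and only the transitions named in the table are modelled; anything else throws.

```java
// Hypothetical model of the entity states in the table above.
enum EntityState { TRANSIENT, PERSISTENT, DETACHED, REMOVED }

// Hypothetical session operations; CLOSE_SESSION stands for the session being closed.
enum SessionOp { PERSIST, UPDATE, DELETE, CLOSE_SESSION }

class EntityStateSketch {
    static EntityState apply(EntityState s, SessionOp op) {
        switch (op) {
            case PERSIST:
                if (s == EntityState.TRANSIENT) return EntityState.PERSISTENT;
                if (s == EntityState.DETACHED) {
                    // Hibernate reports this case as "detached entity passed to persist"
                    throw new IllegalStateException("detached entity passed to persist");
                }
                break;
            case UPDATE:
                if (s == EntityState.DETACHED) return EntityState.PERSISTENT; // reattach
                break;
            case DELETE:
                // JPA removes only managed entities; Hibernate's session.delete also accepts detached ones
                if (s == EntityState.PERSISTENT || s == EntityState.DETACHED) return EntityState.REMOVED;
                break;
            case CLOSE_SESSION:
                if (s == EntityState.PERSISTENT) return EntityState.DETACHED;
                break;
        }
        throw new UnsupportedOperationException(op + " on " + s + " is not modelled in this sketch");
    }
}
```

A new object with a manually assigned ID on a generated-ID entity would enter this model as DETACHED, which is why PERSIST fails for it.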

Entity state transition diagram:

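This section uses the following entity to describe the behavior of the commonly used persistence APIs:

    import javax.persistence.Entity;
    import javax.persistence.GeneratedValue;
    import javax.persistence.Id;

    @Entity
    public class Person {
        @Id
        @GeneratedValue
        private Long id;
        private String name;

        // used by the persist example below
        public void setName(String name) { this.name = name; }
    }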

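Note that the APIs in the table below generally do not execute SQL immediately; the SQL statements are issued at flush time. (There are exceptions: for example, with an IDENTITY id generator, save/persist must run the INSERT immediately to obtain the id, and merge may SELECT the row it merges into.)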

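| session. method | Description |
| --- | --- |
| persist | Converts a transient (new) object into the managed state, for example: Person person = new Person(); person.setName("Alex"); session.persist(person); The method returns void: it puts the argument object itself into the managed state rather than copying its state. Its semantics strictly follow JSR-220: a new instance becomes managed; an already managed instance is ignored (cascades still apply); a removed instance becomes managed again; a detached instance causes an exception ("detached entity passed to persist" in Hibernate). The identifier is not guaranteed to be assigned immediately; that may happen at flush time. |
| save | Hibernate's original persistence API; it does not follow the JPA specification. It behaves like persist, but the implementation details differ. It immediately returns the id assigned to the transient object. Calling save() on a detached object generates a new id, i.e. the object is inserted again as a new row. |
| update | Hibernate's original persistence API: it reattaches the given detached instance itself to the session, making it persistent. If the session already holds a persistent instance with the same identifier, Hibernate throws NonUniqueObjectException. |
| merge | Logic: if the session already holds a persistent instance with the same identifier, the state of the given object is copied onto it; otherwise Hibernate tries to load it from the database, or creates a new persistent instance. The persistent instance is returned; the given instance does not become associated with the session and stays detached. JSR-220 semantics are respected: merging a new instance creates a new managed copy; merging a managed instance is ignored (cascades still apply); merging a removed instance throws IllegalArgumentException. |
| saveOrUpdate | Hibernate-specific API with no JPA counterpart. It behaves like update and can be used to make an instance persistent regardless of whether it is transient (it is saved) or detached (it is reattached). |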
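The key difference between the persist and merge rows above is which instance ends up managed. The toy persistence context below illustrates only that identity aspect, under the assumption of an in-memory map instead of a database; Item and MiniContext are hypothetical classes, not Hibernate or JPA types.

```java
import java.util.HashMap;
import java.util.IdentityHashMap;
import java.util.Map;

// Hypothetical entity with a generated id and one state field.
class Item {
    Long id;
    String name;

    Item(Long id, String name) { this.id = id; this.name = name; }
}

// Toy persistence context that only tracks which instances are managed.
class MiniContext {
    private final Map<Long, Item> byId = new HashMap<>();
    private final Map<Item, Boolean> managed = new IdentityHashMap<>();
    private long nextId = 1;

    // persist: the argument itself becomes managed; nothing is returned, nothing is copied.
    void persist(Item item) {
        if (item.id != null) {
            // a manually assigned id on a generated-id entity looks "detached"
            throw new IllegalStateException("detached entity passed to persist");
        }
        item.id = nextId++;
        attach(item);
    }

    // merge: copy the argument's state onto the managed instance with the same id
    // (or onto a new managed instance) and return that instance; the argument stays unmanaged.
    Item merge(Item other) {
        Item target = other.id == null ? null : byId.get(other.id);
        if (target == null) {
            // Hibernate would first try to load the row from the database here
            target = new Item(other.id != null ? other.id : nextId++, null);
            attach(target);
        }
        target.name = other.name;
        return target;
    }

    boolean contains(Item item) { return managed.containsKey(item); }

    private void attach(Item item) {
        byId.put(item.id, item);
        managed.put(item, Boolean.TRUE);
    }
}
```

With merge, code must keep using the returned instance: further changes to the argument are not tracked.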

Error message:

org.springframework.orm.hibernate3.HibernateSystemException: identifier of an instance of *** was altered from 248178 to null; nested exception is org.hibernate.HibernateException: identifier of an instance of wnetJhInterface was altered from 248178 to null

at org.springframework.orm.hibernate3.SessionFactoryUtils.convertHibernateAccessException(SessionFactoryUtils.java:690)

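Cause: at flush time Hibernate checks that the identifier of every managed entity is unchanged; here the id of an entity loaded by the query was later set to null. Solution: call sess.clear() after the query completes. This detaches all entities held by the session, so the later change to the id is no longer checked at flush; note that clear() also discards any changes that have not been flushed yet.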
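The mechanism behind this error can be modelled with an identity map of id snapshots. The sketch below is a conceptual model only, assuming nothing about Hibernate internals beyond the check described above; Account and IdSnapshotContext are hypothetical names.

```java
import java.util.IdentityHashMap;
import java.util.Map;
import java.util.Objects;

// Hypothetical entity whose id can be (wrongly) modified after loading.
class Account {
    Long id;

    Account(Long id) { this.id = id; }
}

// Toy model of the flush-time check behind "identifier of an instance of ... was altered from X to Y".
class IdSnapshotContext {
    // entity instance -> id recorded when it became managed
    private final Map<Account, Long> snapshots = new IdentityHashMap<>();

    void manage(Account a) { snapshots.put(a, a.id); }

    // Compare every managed entity's current id with its snapshot, as flush does.
    void flush() {
        for (Map.Entry<Account, Long> e : snapshots.entrySet()) {
            Long now = e.getKey().id;
            if (!Objects.equals(e.getValue(), now)) {
                throw new IllegalStateException("identifier of an instance of Account was altered from "
                        + e.getValue() + " to " + now);
            }
        }
    }

    // Like Session.clear(): forget all managed entities, so nothing is checked any more.
    void clear() { snapshots.clear(); }
}
```

After clear() the modified object is simply no longer part of the context, which is why the author's workaround makes the error disappear.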

In HQL, write prop is null. You cannot use a parameter instead, i.e. write prop is :p and bind the (null) value to it.
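When the value may or may not be null, the condition has to be chosen while building the query string. A minimal sketch, assuming a hypothetical helper HqlNullSafe (not part of Hibernate); the caller binds the named parameter only when the value is non-null.

```java
// Hypothetical helper: emit "prop is null" for a null value, otherwise a named-parameter comparison.
final class HqlNullSafe {
    private HqlNullSafe() {}

    static String condition(String property, String paramName, Object value) {
        // a null value cannot be bound into an "is" test, so write the literal null check
        return value == null ? property + " is null" : property + " = :" + paramName;
    }
}
```

For example, "from Person p where " + HqlNullSafe.condition("p.name", "name", name) produces either ... where p.name is null or ... where p.name = :name, followed by setParameter("name", name) only in the second case.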

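Error message: Position beyond number of declared ordinal parameters. Remember that ordinal parameters are 1-based! Position: 2

Cause: the first positional parameter must be set at index 0. Despite the wording of the message, Hibernate's Query.setParameter(int, ...) positions are 0-based, unlike JDBC, where parameter indexes start at 1.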

Error message: Expected positional parameter count: ?, actual parameters ...

Cause: unknown. The problem can be worked around by using named parameters:

    String hql = "update user where id in (:id)";
    sess = getSessionFactory().getCurrentSession();
    // a Session that works in the dynamic-map entity mode
    Session msess = sess.getSession( EntityMode.MAP );
    Query q = msess.createQuery( hql.toString() );
    List<Object> params = new ArrayList<Object>();
    for ( Map<String, ?> d : dataSet.getAllData() )
    {
        params.add( d.get( "id" ) );
    }
    // bind the whole collection to the named parameter :id
    q.setParameterList( "id", params );

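Error message: org.hibernate.type.SerializationException: could not serialize

Cause: in some cases Hibernate uses Java serialization to serialize objects. Therefore, in addition to annotating a field with @Transient, you must also declare it with the transient keyword, so that Java serialization skips the field.

    @Inject @Transient @JsonIgnore private transient ServiceHelper service;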
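The reason both markers are needed: @Transient only affects the ORM mapping, while Java serialization ignores annotations and honors only the transient keyword. The plain-JDK sketch below shows this with a hypothetical non-serializable Helper class standing in for ServiceHelper; WithTransient, WithoutTransient and SerializationCheck are illustrative names.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.NotSerializableException;
import java.io.ObjectOutputStream;
import java.io.Serializable;

// Hypothetical non-serializable collaborator, standing in for ServiceHelper.
class Helper {}

class WithTransient implements Serializable {
    private static final long serialVersionUID = 1L;
    String name = "Alex";
    transient Helper service = new Helper(); // skipped by Java serialization
}

class WithoutTransient implements Serializable {
    private static final long serialVersionUID = 1L;
    String name = "Alex";
    Helper service = new Helper(); // serialization fails on this field
}

final class SerializationCheck {
    private SerializationCheck() {}

    // Returns true if Java serialization of obj succeeds, false on NotSerializableException.
    static boolean serializes(Object obj) {
        try (ObjectOutputStream out = new ObjectOutputStream(new ByteArrayOutputStream())) {
            out.writeObject(obj);
            return true;
        } catch (NotSerializableException e) {
            return false;
        } catch (IOException e) {
            throw new IllegalStateException(e);
        }
    }
}
```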

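- Non-entity (unmapped) properties cannot appear in the SELECT or WHERE clause of an HQL query

- For an arbitrary polymorphic mapping (@Any), even if every associated entity type has property A (declared through a @MappedSuperclass), A still cannot appear in the SELECT or WHERE clause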

In Hibernate 3.6.x, when an arbitrary polymorphic mapping is used and one of the association targets is an entity class hierarchy, for example:

    @AnyMetaDef (
            name = "adminMetaDef",
            idType = "integer",
            metaType = "integer",
            metaValues = {
                    @MetaValue ( value = "1", targetEntity = Child.class ),
                    // If Org is an abstract entity class with several concrete entity subclasses,
                    // none of those subclass entities can be associated under this configuration
                    @MetaValue ( value = "2", targetEntity = Org.class )
            }
    )
    @Any ( metaDef = "adminMetaDef", metaColumn = @Column ( name = "ADMIN_TYPE") )
    @JoinColumn ( name = "ADMIN_ID" )
    private Admin mainAdmin;

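then a @MetaValue must be defined for every subclass. Otherwise the meta column ADMIN_TYPE is saved as null on insert, and the mainAdmin property cannot be read back afterwards. The following patch fixes this problem: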

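    public void nullSafeSet( PreparedStatement st, Object value, int index, SessionImplementor session )
            throws HibernateException, SQLException
    {
        // look up the meta value of the class, falling back to its superclasses
        baseType.nullSafeSet( st, value == null ? null : getKey( value ), index, session );
    }

    // Returns the name of the superclass of the class named clsName,
    // or null if there is no superclass or the class cannot be loaded
    private Object getSuperclass( Object clsName )
    {
        try
        {
            Class<?> c = Class.forName( clsName.toString() );
            Class<?> sup = c.getSuperclass();
            return sup == null ? null : sup.getName();
        }
        catch ( ClassNotFoundException e )
        {
            return null;
        }
    }

    private Object getKey( Object clsName )
    {
        // stop at the top of the hierarchy; without this check an unmapped class
        // would make getKey recurse forever (getKey(null) -> getSuperclass(null) -> null)
        if ( clsName == null )
        {
            return null;
        }
        Object key = this.keys.get( clsName );
        if ( key == null )
        {
            return getKey( getSuperclass( clsName ) );
        }
        return key;
    }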
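The core of the patch is a lookup that walks up the class hierarchy until a registered meta value is found. The standalone sketch below does the same walk iteratively on Class objects, which terminates naturally at Object. MetaKeyLookup and the Org/Dept/Team/Child classes are hypothetical stand-ins for the mapping above, not Hibernate types.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical hierarchy mirroring the @AnyMetaDef example: only Org and Child are registered.
abstract class Org {}
class Dept extends Org {}
class Team extends Dept {}
class Child {}

final class MetaKeyLookup {
    private final Map<String, Object> keys = new HashMap<>(); // entity class name -> meta value

    void register(Class<?> entityClass, Object metaValue) {
        keys.put(entityClass.getName(), metaValue);
    }

    // Walk from the concrete class up to Object; returns null if nothing in the chain is registered.
    Object keyFor(Class<?> entityClass) {
        for (Class<?> c = entityClass; c != null; c = c.getSuperclass()) {
            Object key = keys.get(c.getName());
            if (key != null) {
                return key;
            }
        }
        return null;
    }
}
```

A Team instance therefore gets meta value 2 (inherited from Org) instead of null, which is what the patched nullSafeSet writes to ADMIN_TYPE.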

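After applying the patch above, loading the object shows that the type of the mainAdmin property does not match its real type: it is the Org class declared in @MetaValue. The following patch fixes this problem: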

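    // Change lines 130, 210 and 216 of this file to call the following method instead:
    private Object internalLoad( String entityName, Serializable id, SessionImplementor session )
    {
        // The third argument is changed to true, i.e. lazy loading is disabled,
        // which avoids the problem of the proxy object having the wrong type
        return session.internalLoad( entityName, id, true, false );
    }
    // The object returned by the method above is not a proxy, so its semantics may differ
    // from the original code (I have not verified this)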

    <bean id="sessionFactory" p:dataSource-ref="dataSource" class="org.springframework.orm.hibernate4.LocalSessionFactoryBean">
        <property name="packagesToScan">
            <list>
                <value>cc.gmem.mgr.*.model</value>
            </list>
        </property>
        <property name="hibernateProperties">
            <value>
                hibernate.dialect=org.hibernate.dialect.Oracle10gDialect
                hibernate.jdbc.batch_size=20
                hibernate.show_sql=false
                hibernate.format_sql=false
                hibernate.generate_statistics=true
                hibernate.transaction.factory_class=org.hibernate.transaction.JDBCTransactionFactory
                hibernate.current_session_context_class=org.springframework.orm.hibernate4.SpringSessionContext
            </value>
        </property>
    </bean>

    <bean id="txManager" class="org.springframework.orm.hibernate4.HibernateTransactionManager"
          p:sessionFactory-ref="sessionFactory" />
